Facebook has developed a neural network that can solve some integrals that Mathematica cannot. In the first video, I explain how to use free software products (Axiom and Sage) to solve one of these integrals. In the second video, I go into more detail about Robert Risch’s theorem and how it applies to the Facebook integral.
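For reference, here is an informal statement of the classical result that Risch’s decision procedure builds on, Liouville’s theorem. It glosses over technical conditions on the constants and is not the precise formulation used in the videos. Let $K$ be a differential field of characteristic zero with constant field $C$, and let $f \in K$. If $f$ has an antiderivative in some elementary extension of $K$, that antiderivative must take the restricted form

$$\int f\,dx = v_0 + \sum_{i=1}^{n} c_i \log v_i, \qquad \text{equivalently} \qquad f = v_0' + \sum_{i=1}^{n} c_i \frac{v_i'}{v_i},$$

where the $c_i$ are constants (possibly algebraic over $C$) and $v_0, v_1, \ldots, v_n$ lie in $K$, after adjoining those constants if necessary. Risch’s algorithm decides whether such $v_i$ and $c_i$ exist. For example, no such decomposition exists for $f = e^{-x^2}$, which is why $\int e^{-x^2}\,dx$ is not elementary.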

Impeachment!

The battery went dead on the recorder, so only the first few minutes of the speech were saved.

Homelessness: A Constitutional Right?

Brent discusses a Ninth Circuit ruling on homelessness:

Also, the Hallmark Channel same-sex wedding controversy:

https://www.cnn.com/2019/12/15/business/hallmark-same-sex-advertisement-backlash

Sage 9: Multivariate Factorization over Q̅

Do We Hate Freedom?

Brent responds to Carol Roth’s defense of capitalism:

https://foxnews.com/entertainment/carol-roth-mark-ruffalo-attacking-capitalism

Exact Computation of Algebraic Varieties using Numerical Techniques

(graduate seminar lecture at Catholic University)

Power, Privacy, and the U.S. Constitution

Tuesday, July 31, 2018

Hall of the States (FOX News)

Washington, DC

President Donald Trump has recently nominated Brett Kavanaugh to the U.S. Supreme Court, setting off another predictably partisan battle over a Supreme Court nominee.

One thing we won’t get is a justice who rules according to the text of the Constitution, since the Federal Government exercises powers so wildly beyond the scope of the Constitution that any attempt to enforce the document as written would set off a constitutional crisis.


/etc: Please stop enabling

One of the most painful legacies of UNIX’s long gestation is the mess of scripts, configuration files, and databases we affectionately call “/etc”. Binaries go in “/bin”, libraries belong in “/lib”, and user directories expand out under “/home”. But what if something doesn’t fit? Where does it go? Into “/etc”.

Of course, “/etc” isn’t really the overflow directory its name implies. “/config” would be a far better choice, more accurately reflecting the nature of its contents, but like so much of the data contained within it, its name suggests a lack of organization rather than a coherent collection of configuration settings. While the rest of the filesystem has moved on to SQL, the user account database is still stored in colon-separated fields. Cisco routers can snapshot a running network configuration to be restored on reboot, but the best we can do is fiddle with the interfaces file, then reboot to make the changes live. Or adjust the interface settings by hand and wait for the next reboot to see if the network comes up right. Our name service is configured in /etc/hosts, or /etc/resolv.conf, or /etc/network, or /etc/dnsmasq.d, or /etc/systemd, or wherever the next package maintainer decides to put it. Nor can you simply save a system’s configuration by making a copy of /etc, because there’s plenty of non-configuration information there, mostly system scripts.
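The colon-separated account format mentioned above is easy to see in code. What follows is a minimal Python sketch, assuming the seven-field /etc/passwd layout documented in passwd(5): name, password placeholder, UID, GID, GECOS comment, home directory, and login shell. The names `PasswdEntry`, `parse_passwd_line`, and `parse_passwd`, and the sample accounts, are hypothetical and chosen only for illustration. Real programs should normally use Python’s standard `pwd` module, which queries the system’s account database instead of parsing the file by hand.

```python
from typing import NamedTuple


class PasswdEntry(NamedTuple):
    """One /etc/passwd record, in the field order given in passwd(5)."""
    name: str
    password: str  # usually "x"; the real hash lives in /etc/shadow
    uid: int
    gid: int
    gecos: str     # free-form comment, e.g. the user's full name
    home: str
    shell: str


def parse_passwd_line(line: str) -> PasswdEntry:
    """Split one colon-separated record into its seven fields."""
    fields = line.rstrip("\n").split(":")
    if len(fields) != 7:
        raise ValueError(f"expected 7 colon-separated fields, got {len(fields)}")
    name, password, uid, gid, gecos, home, shell = fields
    return PasswdEntry(name, password, int(uid), int(gid), gecos, home, shell)


def parse_passwd(text: str) -> list[PasswdEntry]:
    """Parse the text of a whole passwd file, skipping blank lines."""
    return [parse_passwd_line(line) for line in text.splitlines() if line.strip()]


if __name__ == "__main__":
    # Hypothetical sample data, not read from a real system.
    sample = (
        "root:x:0:0:root:/root:/bin/bash\n"
        "alice:x:1000:1000:Alice Example:/home/alice:/bin/sh\n"
    )
    for entry in parse_passwd(sample):
        print(entry.name, entry.uid, entry.home)
```

Note that the format has no escape mechanism: no field may itself contain a colon, and every program that reads the file must agree on the field order purely by convention.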

What a mess.

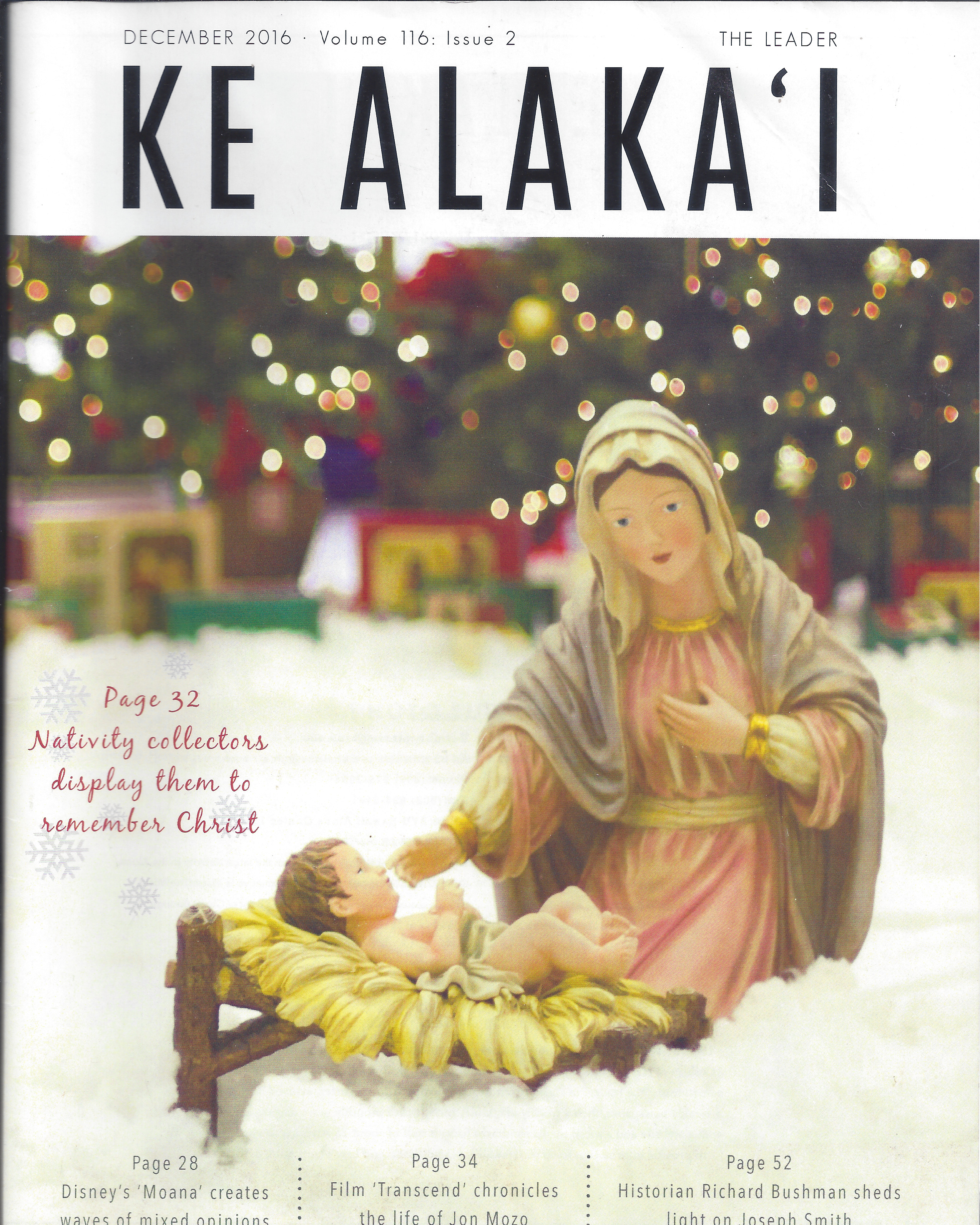

Ke Alaka’i

I spent several months in Laie, Hawaii, where the local college magazine gave me some very good press.


An Open Letter to Surfing the Nations

My name is Brent Baccala. Some of you know me only as the man in the white robe holding the sign that reads “Surfing the Nations is a Fraud”. I don’t particularly like the sign, but it’s been given to me by God. I’d rather just stand in front of Surfer’s Church with a microphone and preach, but Tom Bower will not allow that to happen. Let me explain, briefly, my history with STN, tell you what has happened over the last month, and summarize the message that I wish you to hear.